# Support embedding YouTube Videos in Notebooks

from IPython.display import YouTubeVideo

YouTubeVideo("mYE0FhwHD-w")

Pitfalls of Feature Engineering¶

In this notebook, we present three issues you might encounter in feature engineering:

- Redundant Features: When duplicate features are introduced this can create problems when solving the normal equation.

- Too many Features: When you add more features than you have data the solution to the normal equations becomes underdetermined.

- Overfitting: When improvements in the model or features introduced to fit the model to the available data result in poor predictions on new data.

The third issue overfitting will also be the focus on the next several lectures and is the fundamental challenge in modeling.

Imports¶

In this notebook we will be using the following packages:

import numpy as np

import pandas as pd

import plotly.offline as py

import plotly.express as px

import plotly.graph_objects as go

from plotly.subplots import make_subplots

import plotly.figure_factory as ff

import cufflinks as cf

cf.set_config_file(offline=True, sharing=False, theme='ggplot');

#import torch

from sklearn.linear_model import LinearRegression

Intro Video¶

The following video walk-through presents this notebook. I did not break it into sections but will try to do that in my next notebook.

YouTubeVideo("YHRG-OyFTLM")

Toy Data and Model Setup¶

The following video provides an overview of the data and basic linear model setup.

YouTubeVideo("BJ270_CtU90")

For this problem we will use a very simple toy dataset to help illustrate where things will fail.

data = pd.read_csv("data/train.csv")

data

data_scatter = go.Scatter(x=data["X"], y=data["Y"], name="data", mode="markers")

go.Figure([data_scatter])

Fit a Basic Linear Model¶

For this notebook, we are going to implement the solution to the normal equations ourselves so that we can observe the resulting numerical issues.

from numpy.linalg import solve

def fit(X, Y):

return solve(X.T @ X, X.T @ Y)

We will need to add a ones column to our feature matrix

def add_ones_column(data):

n,_ = data.shape

return np.hstack([np.ones((n,1)), data])

Constructing the original $\mathbb{X}$ and $\mathbb{Y}$ matrices

X = data[['X']].to_numpy()

Y = data[['Y']].to_numpy()

def phi_line(X):

return add_ones_column(X)

Phi_line = phi_line(X)

Phi_line

Fit the Model¶

Solving for $\hat{\theta}$ using our Phi matrix

theta_hat_line = fit(Phi_line, Y)

theta_hat_line

Make Predictions¶

Make predictions at each of the original X values

def predict(theta, X):

return X @ theta

data['Y_hat'] = predict(theta_hat_line, Phi_line)

data

fig = go.Figure()

fig.add_trace(data_scatter)

basic_ols = go.Scatter(x=data['X'], y=data['Y_hat'], mode="lines", name="$y=mx+b$")

fig.add_trace(basic_ols)

Computing the Squared Loss¶

We compute the squared loss so we can compare with it later:

def squared_loss(Y, Y_hat):

return np.mean((Y - Y_hat)**2)

loss_line = squared_loss(Y, data[['Y_hat']].to_numpy())

loss_line

Creating a Model Class¶

Because we are going to make a number of models. We can define a simple helper class to maintain our model.

class LinearModel:

def __init__(self, phi):

self.phi = phi

def fit(self, X, Y):

Phi = self.phi(X)

self.theta_hat = solve(Phi.T @ Phi, Phi.T @ Y)

return self.theta_hat

def predict(self, X):

Phi = self.phi(X)

return Phi @ self.theta_hat

def loss(self, X, Y):

return np.mean((Y - self.predict(X))**2)

Building and training the model

model_line = LinearModel(phi_line)

model_line.fit(X,Y)

model_line.loss(X,Y)

The Issue with Redundant Feature¶

Redundant features occur when features are linear combinations of existing features.

YouTubeVideo("M11bB0Yd2is")

Suppose we were to duplicate a feature. For example, suppose we copied the original value of $X$. Here we will make the features matrix:

\begin{align} \Phi = \left[1, X, X \right] \end{align}def phi_dup(x):

return add_ones_column(np.hstack([X, X]))

Phi_dup = phi_dup(X)

Phi_dup

Note that any feature that is a linear combination of other features would be problematic. If we attempt to solve for the new $\hat{\theta}$ for this revised model we get:

try:

theta_hat_dup = solve(Phi_dup.T @ Phi_dup, Phi_dup.T @ Y)

print(theta_hat_dup)

except np.linalg.LinAlgError as err:

print(err)

The above fails because the $\Phi^T \Phi$ matrix is no longer full rank and we cannot solve for $\theta$ in the system of linear equations:

$$ \Phi^T \Phi \theta = \Phi^T Y $$We can actually check the rank of this 3x3 matrix:

Phi_dup.T @ Phi_dup

from numpy.linalg import matrix_rank

matrix_rank(Phi_dup.T @ Phi_dup)

There are actually infinitely many possible optimal solutions. We can consider the two extreme parameterizations where we ignore (set the coefficient to zero) one or the other redundant feature:

theta_a = np.array([[theta_hat_line[0,0], theta_hat_line[1,0], 0]]).T

theta_a

theta_b = np.array([[theta_hat_line[0,0], 0, theta_hat_line[1,0]]]).T

theta_b

Then we can examine the squared loss for many possible convex combinations of the two parameters.

def convex_comb(theta_a, theta_b, alpha):

return alpha * theta_a + (1-alpha) * theta_b

[squared_loss(predict(convex_comb(theta_a, theta_b, a), Phi_dup), Y)

for a in np.linspace(0, 1, 10)]

They are equivalent up to numerical precision.

Too Many Features¶

In the basic formulation of least squares regression we assumed that there was far more data than features. What if this is not the case? Let's examine what happens when we add too many features.

YouTubeVideo("iwbqbPg740I")

To illustrate this, let's introduce a feature that is 1 when we are near a particular location and decreases quickly as we move away. This is actually called a radial basis function. These are used when you might imagine something happens near a particular value. For example, if you were trying to predict when someone asks their Smart Alarm to Snooze you might place radial basis functions around common hours (e.g., 6AM, 7AM, and 8AM).

The following block of code creates a single radial basis function feature.

def rbf_feature(loc, x, beta=0.1):

return np.exp(-((loc - x)**2)/beta)

Notice that there is an additional $\beta$ hyper-parameter. This hyper-parameter determines the width of each bump.

z = np.linspace(-3,3,300)

fig = go.Figure()

fig.add_trace(go.Scatter(x=z, y=rbf_feature(0, z, beta=0.1), mode="lines", name=r"$\beta=0.1$"))

fig.add_trace(go.Scatter(x=z, y=rbf_feature(0, z, beta=1.), mode="lines", name=r"$\beta=1$"))

fig.add_trace(go.Scatter(x=z, y=rbf_feature(0, z, beta=2.), mode="lines", name=r"$\beta=2$"))

We can place these radial basis function features at different locations along the x-axis to implement multiple features.

def phi_rbf(locations, X):

return np.hstack([rbf_feature(loc, X) for loc in locations])

Suppose we start by placing four bumps at a few locations along the x-axis:

four_bump_locations = [-2, -1, 0, 1]

Phi_rbf4 = phi_rbf(four_bump_locations, X)

Phi_rbf4

Building a model

model_rbf4 = LinearModel(lambda X: phi_rbf(four_bump_locations, X))

model_rbf4.fit(X, Y)

Predicting the data and computing the loss:

model_rbf4.loss(X, Y)

Visualizing the Model¶

To visualize this model we will want to make predictions at more locations along the x-axis. We will call all these locations x_test (that is where we want to test the model).

X_test = np.expand_dims(np.linspace(X.min()-1, X.max()+1, 200), 1)

fig = go.Figure([data_scatter])

# Redraw the line plot so it extends out beyond data.

line_plot = go.Scatter(

x=X_test.flatten(),

y=model_line.predict(X_test).flatten(),

mode="lines", name="$y=mx+b$")

fig.add_trace(line_plot)

# Draw the new model

phi_rbf4_plot = go.Scatter(x=X_test.flatten(),

y=model_rbf4.predict(X_test).flatten(),

mode="lines", name="4 RBF Features")

fig.add_trace(phi_rbf4_plot)

# Draw the Bumps

for b in four_bump_locations:

fig.add_trace(go.Scatter(x=X_test.flatten(),

y=rbf_feature(b, X_test).flatten(),

name="Bump @ " + str(b),

line=dict(dash="longdash")))

fig

Notice that the Four RBF function model doesn't really follow the linear trend in the data. Let's add a the line features as well.

def phi_line_rbf(locations, X):

return np.hstack([phi_line(X), phi_rbf(locations, X)])

As before we create the transformed features:

phi_line_rbf(four_bump_locations, X)

model_line_rbf4 = LinearModel(lambda X: phi_line_rbf(four_bump_locations, X))

model_line_rbf4.fit(X,Y)

Predicting the data and computing the loss:

model_line_rbf4.loss(X,Y)

Visualizing the Model¶

Evaluating the model at X_test locations.

fig = go.Figure([data_scatter, line_plot, phi_rbf4_plot])

# Draw the new model

phi_line_rbf4_plot = go.Scatter(x=X_test.flatten(),

y=model_line_rbf4.predict(X_test).flatten(),

mode="lines", name="Line + 4 RBF Features")

fig.add_trace(phi_line_rbf4_plot)

fig

Notice above that the newest model which combines RBF features and the line features better follows the trends in the data.

Let's try to add many more features.¶

Let's place 20 evenly spaced bumps between -3 and 3.

many_bump_locations = np.linspace(-3, 3, 20)

Notice the shape of our new matrix

Phi_line_rbf20 = phi_line_rbf(many_bump_locations, X)

Phi_line_rbf20.shape

Let's fit these 9 data points to a 22 feature linear model:

model_line_rbf20 = LinearModel(lambda X: phi_line_rbf(many_bump_locations, X))

model_line_rbf20.fit(X,Y)

That worked!? Should it have worked? Recall we are solving:

$$ \Phi^T \Phi \hat{\theta} = \Phi^T \mathbb{Y} $$There should be more than one solution since we have way more variables than we have equations. Put another way the inverse operation here should not be well defined:

$$ \hat{\theta} = \left(\Phi^T \Phi \right)^{-1} \Phi^T \mathbb{Y} $$We can examine the rank and inverse of this matrix:

A = Phi_line_rbf20.T @ Phi_line_rbf20

A.shape

matrix_rank(A)

Numpy uses iterative algorithms to invert matrices and does not necessarily check that a solution was found.

from numpy.linalg import inv

inv(A) @ A

By comparison if we look at something that was more appropriate:

B = model_rbf4.phi(X).T @ model_rbf4.phi(X)

inv(B) @ B

We can still use the model to make predictions since the algorithm did return a $\hat{\theta}$

fig = go.Figure([data_scatter, line_plot, phi_rbf4_plot, phi_line_rbf4_plot])

# Draw the new model

phi_line_rbf20_plot = go.Scatter(

x=X_test.flatten(),

y=model_line_rbf20.predict(X_test).flatten(),

mode="lines", name="Line + 20 RBF Features")

fig.add_trace(phi_line_rbf20_plot)

fig.update_yaxes(range=[-5,10])

fig

Notice that despite the solution to the linear systems being not well posed, we were able to find a model that does appear to minimize the loss. When we cover regularization, we will return to how we can effectively train models when there are more features than data.

Overfitting¶

One of the big challenges is machine learning and statistics is building models that generalize beyond the data. Unfortunately, when building our models the data is all we have and we design our models to reflect the patterns in our data. However, it is possible to go too far.

YouTubeVideo("b6l9eVGERxY")

Using our earlier example with with a more reasonable number of features

bump_locations7 = [-2, -1.5, -1, -0.5, 0, 0.5, 1]

Phi_line_rbf7 = phi_line_rbf(bump_locations7, X)

Phi_line_rbf7.shape

Notice that we have 9 data points and 9 features (2 linear model features and 7 RBF features).

model_line_rbf7 = LinearModel(lambda X: phi_line_rbf(bump_locations7, X))

model_line_rbf7.fit(X, Y)

Predicting the data and computing the loss:

model_line_rbf7.loss(X, Y)

Visualizing the Model¶

fig = go.Figure([data_scatter, line_plot, phi_rbf4_plot, phi_line_rbf4_plot])

# Draw the new model

phi_line_rbf7_plot = go.Scatter(

x=X_test.flatten(),

y=model_line_rbf7.predict(X_test).flatten(),

mode="lines", name="Line + 7 RBF Features")

fig.add_trace(phi_line_rbf7_plot)

fig.update_yaxes(range=[-10,20])

fig

Did we minimize the error? Let's compare the models:

models = [model_line, model_rbf4, model_line_rbf4, model_line_rbf7]

model_names = ["Line", "4 RBF", "Line + 4 RBF", "Line + 7 RBF"]

train_loss = [m.loss(X, Y) for m in models]

go.Figure([go.Bar(x=model_names, y=train_loss)])

While we minimized the training loss with the latest model it may not have been our "best" model. Why?

Examining New (Test) Data¶

To see why the models we developed above may actually be bad models despite minimizing the loss, we collect some more data from the same underlying process:

test_data = pd.read_csv("data/test.csv")

test_data

Plotting this new data (in red) on top of the old data we see that while the more complex RBF model fit the original data perfectly, it does not fit the new

test_data_scatter = go.Scatter(name = "Test Data", x = test_data['X'], y = test_data['Y'],

mode = 'markers', marker=dict(symbol="cross", color="red"))

fig = go.Figure([data_scatter, test_data_scatter, line_plot, phi_rbf4_plot, phi_line_rbf4_plot, phi_line_rbf7_plot])

fig.update_yaxes(range=[-10,20])

fig

How do we perform on the new data? Computing the loss from before and after.

X_test = test_data[["X"]].to_numpy()

Y_test = test_data[["Y"]].to_numpy()

test_loss = [m.loss(X_test, Y_test) for m in models]

test_loss

fig = go.Figure([go.Bar(x=model_names, y=train_loss, name="Train Loss"),

go.Bar(x=model_names, y=test_loss, name="Test Loss")])

fig.update_yaxes(range=[-0.1,15])

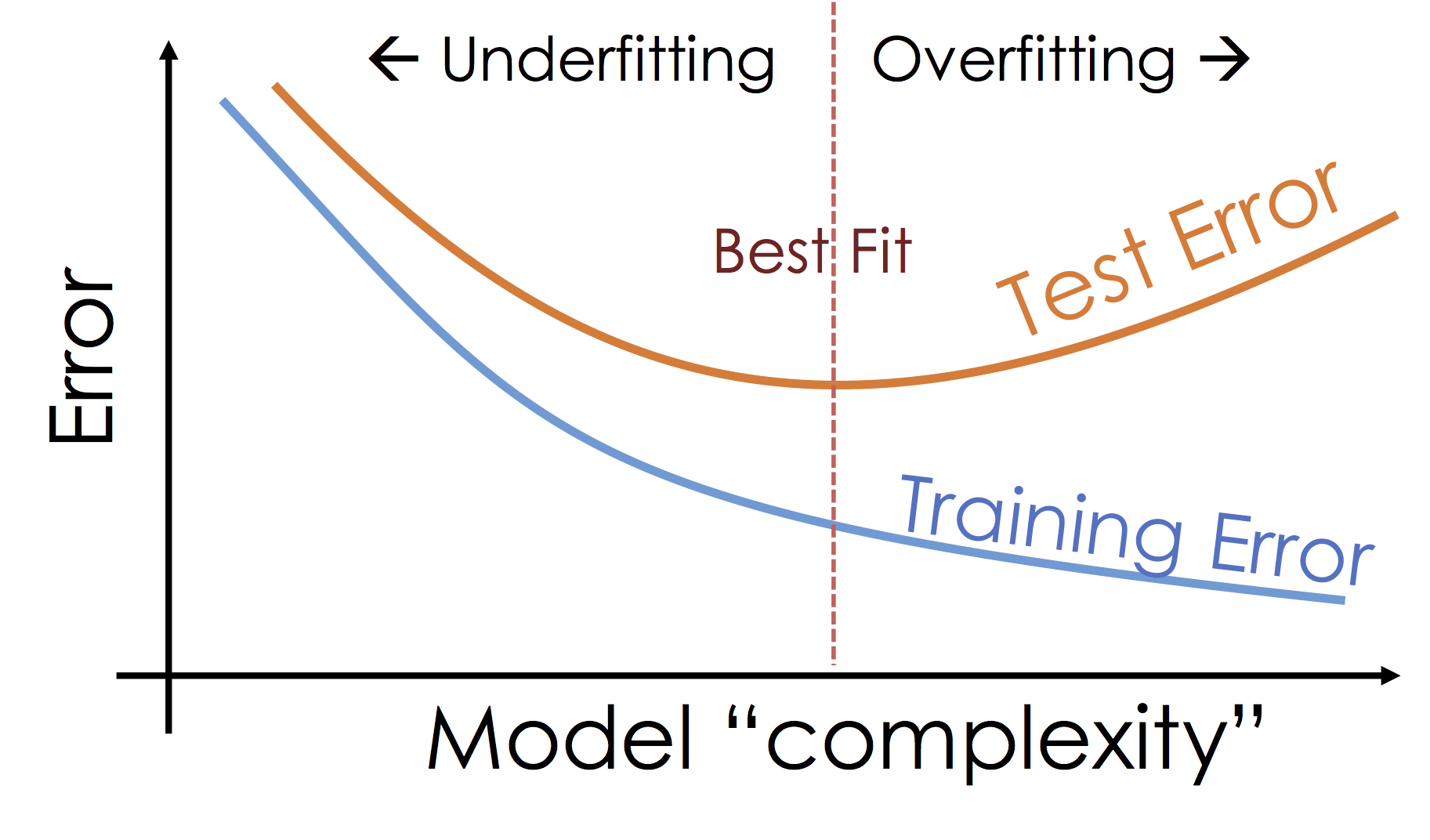

What's happening: Over-fitting¶

As we increase the expressiveness of our model we begin to over-fit to the variability in our training data. That is we are learning patterns that do not generalize beyond our training dataset

Over-fitting is a key challenge in machine learning and statistical inference. At it's core is a fundamental trade-off between bias and variance: the desire to explain the training data and yet be robust to variation in the training data.

We will study the bias-variance trade-off in feuture lectures but for now we will focus on the trade-off between under fitting and over fitting: